The AWS SDK for Salesforce lets developers call Amazon Web Services from Apex code and build applications on services such as Amazon S3 and Amazon EC2.

Docs - github.com/mattandneil/aws-sdk

Install - /packaging/installPackage.apexp?p0=04t6g000008SbOb (append this path to your Salesforce org's base URL)

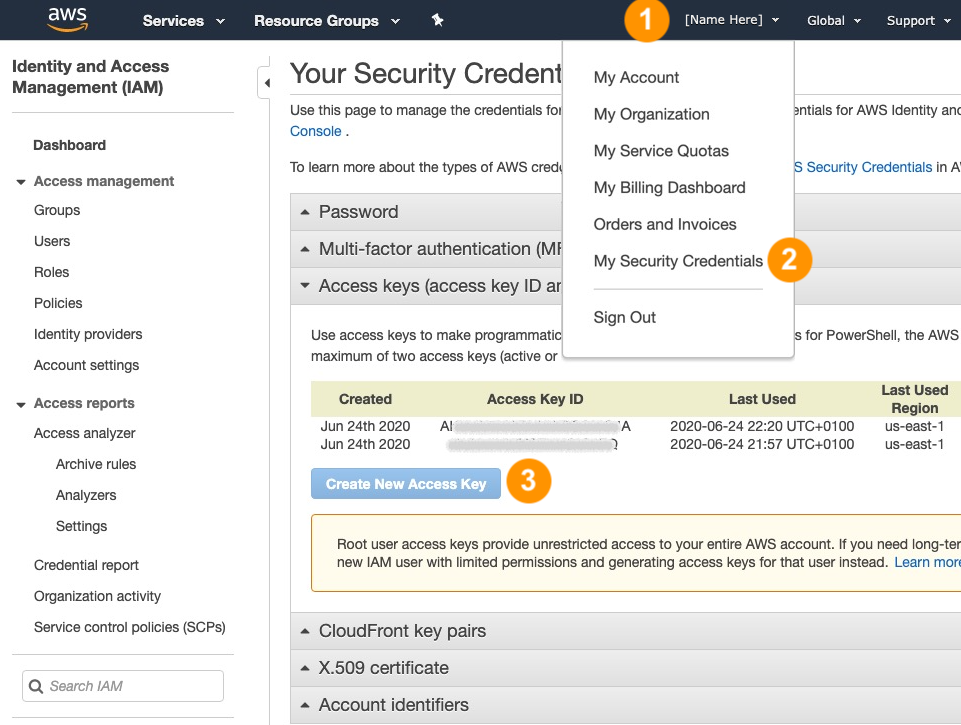

Sign up for AWS, then go to your AWS Console > Security Credentials > Access Keys:

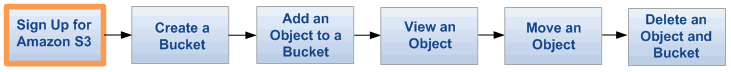

Amazon Simple Storage Service (S3) SDK

The Apex client manipulates both buckets and their contents. You can create and delete objects, given the bucket name and the object key.
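Every S3 request in this section is addressed by a URL made up of the region, the bucket name and, for object operations, the object key. As a minimal sketch, the helper below assembles those URLs in one place. `S3UrlBuilder` and its methods are hypothetical names used for illustration and are not part of the SDK. The sketch also assumes the regional path-style URL form used in the examples in this section.

```apex
// Hypothetical helper, not part of the AWS SDK for Salesforce.
public class S3UrlBuilder {

    // Builds the regional path-style bucket URL:
    // https://s3.<region>.amazonaws.com/<bucket>
    public static String bucketUrl(String region, String bucket) {
        return 'https://s3.' + region + '.amazonaws.com/' + bucket;
    }

    // Appends the object key to the bucket URL. The key is used as-is, so
    // keys with spaces or special characters would need encoding first.
    public static String objectUrl(String region, String bucket, String key) {
        return bucketUrl(region, bucket) + '/' + key;
    }
}
```

With this helper, `S3UrlBuilder.objectUrl('us-east-1', 'testbucket1', 'key.txt')` returns `https://s3.us-east-1.amazonaws.com/testbucket1/key.txt`, which can be assigned to `request.url`.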

Create a bucket:

AWS.S3.CreateBucketRequest request = new AWS.S3.CreateBucketRequest();

request.url = 'https://s3.us-east-1.amazonaws.com/testbucket1';

AWS.S3.CreateBucketResponse response = new AWS.S3.CreateBucket().call(request);

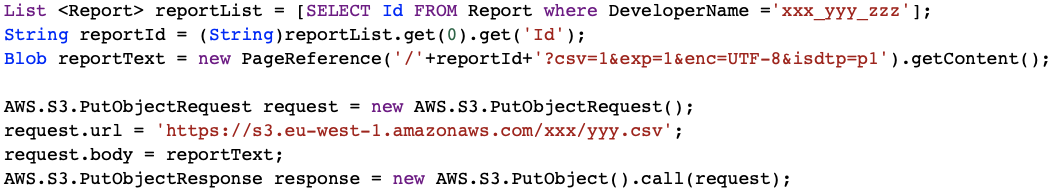

Add an object to a bucket:

AWS.S3.PutObjectRequest request = new AWS.S3.PutObjectRequest();

request.url = 'https://s3.us-east-1.amazonaws.com/testbucket1/key.txt';

request.body = Blob.valueOf('test_body');

AWS.S3.PutObjectResponse response = new AWS.S3.PutObject().call(request);

View an object:

AWS.S3.GetObjectRequest request = new AWS.S3.GetObjectRequest();

request.url = 'https://s3.us-east-1.amazonaws.com/testbucket1/key.txt';

AWS.S3.GetObjectResponse response = new AWS.S3.GetObject().call(request);

List bucket contents:

AWS.S3.ListObjectsRequest request = new AWS.S3.ListObjectsRequest();

request.url = 'https://s3.us-east-1.amazonaws.com/testbucket1';

AWS.S3.ListObjectsResponse response = new AWS.S3.ListObjects().call(request);

Delete an object:

AWS.S3.DeleteObjectRequest request = new AWS.S3.DeleteObjectRequest();

request.url = 'https://s3.amazonaws.com/testbucket1/key.txt';

AWS.S3.DeleteObjectResponse response = new AWS.S3.DeleteObject().call(request);

Amazon Elastic Compute Cloud (EC2) SDK

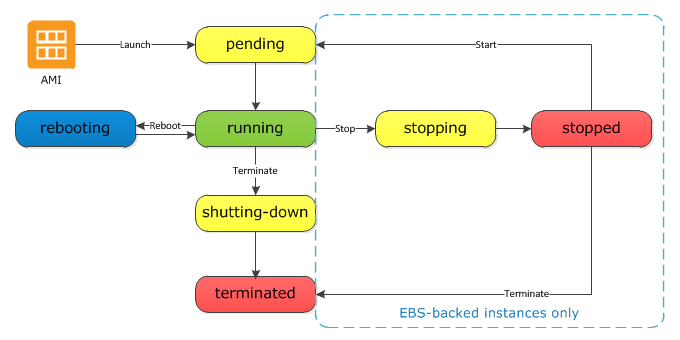

EC2 provides scalable computing capacity in the cloud. The Apex EC2 client calls the service to describe, launch, and terminate instances. The API responds synchronously, but instance state transitions take time, so an instance may not yet be in its final state when the response arrives.

Describe running instances:

AWS.EC2.DescribeInstancesRequest request = new AWS.EC2.DescribeInstancesRequest();

request.url = 'https://ec2.amazonaws.com/';

AWS.EC2.DescribeInstancesResponse response = new AWS.EC2.DescribeInstances().call(request);

Launch a new instance:

AWS.EC2.RunInstancesRequest request = new AWS.EC2.RunInstancesRequest();

request.url = 'https://ec2.amazonaws.com/';

request.imageId = 'ami-08111162';

AWS.EC2.RunInstancesResponse response = new AWS.EC2.RunInstances().call(request);

Terminate an existing instance:

AWS.EC2.TerminateInstancesRequest request = new AWS.EC2.TerminateInstancesRequest();

request.url = 'https://ec2.amazonaws.com/';

request.instanceId = new List<String>{'i-01234567890abcdef'};

request.dryRun = true;

AWS.EC2.TerminateInstancesResponse response = new AWS.EC2.TerminateInstances().call(request);
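Because instance state transitions take time, one option is to check on instances in a later transaction instead of right after launching or terminating them. The sketch below wraps the describe call from this section in a Queueable job. `Ec2StatusCheck` is a hypothetical class name, and only the `AWS.EC2.DescribeInstances*` types come from this README. The fields of the response are not covered here, so the sketch only logs it.

```apex
// Hypothetical class, not part of the AWS SDK for Salesforce.
// Database.AllowsCallouts is required for HTTP callouts from a Queueable.
public class Ec2StatusCheck implements Queueable, Database.AllowsCallouts {

    public void execute(QueueableContext context) {
        // Same call as the "Describe running instances" example.
        AWS.EC2.DescribeInstancesRequest request = new AWS.EC2.DescribeInstancesRequest();
        request.url = 'https://ec2.amazonaws.com/';
        AWS.EC2.DescribeInstancesResponse response = new AWS.EC2.DescribeInstances().call(request);

        // Response fields are an unknown here, so only log the result.
        System.debug(response);
    }
}
```

The job can be queued with `System.enqueueJob(new Ec2StatusCheck());` after a launch or terminate call.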